File Systems on MonARCH

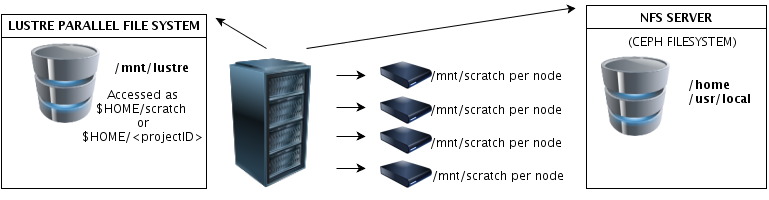

The MonARCH File System is arranged into 3 major parts:

- your home directory

- your project directory

- project scratch space

When a job runs via the Slurm scheduler, it is also given a unique /tmp and /var/tmp directory on a large file system local to the machine. See Extra Features Available for more information.

| File System | Quota | Description |
|---|---|---|
| /home | 5GB per user | NFS mount (CEPH) |
| /mnt/lustre | 50GB or 500GB. See text | LUSTRE |
| /mnt/scratch | No quota but disks are >1TB each. (See below re use) | Local to server |
| /tmp /var/tmp | Same as /mnt/scratch. Or NVMe disk if present | Local to server |

In your home directory you should find a number of symbolic links (ls -al). These point to the project space and scratch you have been allocated. You can request additional space via mcc-help@monash.edu.

For example, if your project name is YourProject001 you will see the following two links:

ls -al ~/

YourProject001 -> /projects/YourProject001

YourProject001_scratch -> /scratch/YourProject001

The first link points to your project data that is backed up weekly (as with your home directory). The second one points to the faster system scratch space, which is not backed up and is used for temporary data.
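To see exactly where each link in a directory points, a small helper along the following lines may be handy. This is only a sketch: `list_links` is a hypothetical function name, not a MonARCH command, and it prints the same information that `ls -al` shows for symbolic links.

```shell
# List the symbolic links directly inside a directory with their targets.
# Defaults to $HOME; pass another directory as the first argument.
# list_links is a hypothetical helper name, not a MonARCH tool.
list_links() {
    local dir="${1:-$HOME}"
    # -maxdepth 1: do not descend into subdirectories
    # -type l:     match symbolic links only (including dangling ones)
    find "$dir" -maxdepth 1 -type l | sort | while IFS= read -r link; do
        printf '%s -> %s\n' "$(basename "$link")" "$(readlink "$link")"
    done
}
```

Calling `list_links` with no argument inspects your home directory; `list_links /some/other/dir` inspects another location.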

File Systems

/home

This is where the user's home directory resides. It is an NFS-mounted CEPH file system. Currently (as of July 2016), users' home directories are backed up nightly to a disk/tape archival system (see https://vicnode.org.au/products-4/vault-tape-mon/ for more information). Any changes in files are kept for 30 days.

/mnt/lustre

This is our high-performance system-wide parallel LUSTRE file system, which has over 300TB of capacity. Each user has two links in their home directory that point to LUSTRE.

- $HOME/scratch: a link to a scratch directory that is unique to each user. It is for temporary use while a program is running.

- $HOME/<projectID>: a link to a project directory, used to store input and output files. Users within a group share the same project directory. An example of a project directory is p2015120001.

The LUSTRE file system is NOT backed up at the moment. A backup / archive solution is in preparation. Currently, each project has a default soft quota of 500 GB; such a project must be led by a supervisor. A PhD student may request a project space of 50 GB. We run a periodic check of disk usage to determine which projects have exceeded their quotas, and we will contact the affected users to resolve this. This avoids jobs being aborted due to exceeding disk quotas. Users may request an increase in project quotas, and these requests will be decided on a case-by-case basis.

/mnt/scratch

This is a scratch space that exists on each compute node. It uses the local hard disk of the machine. The /mnt/scratch partition is different on each compute node, i.e. the /mnt/scratch on the MonARCH login node is different to the one on the compute nodes. For example, if a Slurm job running on a compute node writes to its /mnt/scratch, you will not see any of the files when you are on the MonARCH login node.

These disks are suitable for small, frequent IO operations. Any files written here should be copied to your /home or /mnt/lustre file systems before the job is finished. The disks are not backed up.

PLEASE CLEAN UP YOUR FILES AFTER USE. You should not assume the files will persist after your job has finished. If the OS file system fills up, the machine will hang or crash.
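The copy-back-then-clean-up pattern described above could be sketched as follows. `stage_out` is a hypothetical helper name, and the paths in the usage comments (a per-job directory under /mnt/scratch and a results directory under the project link) are assumptions for illustration; adjust them to your own layout. `SLURM_JOB_ID` is the job ID variable that Slurm sets inside a job.

```shell
# Copy results from a node-local working directory to persistent storage,
# then remove the working directory only if the copy succeeded.
# stage_out is a hypothetical helper name, not a MonARCH tool.
stage_out() {
    local src="$1"    # node-local working directory
    local dest="$2"   # persistent destination (home or project space)
    mkdir -p "$dest" || return 1
    # "$src"/. copies the directory contents, including hidden files
    cp -a "$src"/. "$dest"/ || return 1
    # Clean up the local disk so it does not fill up
    rm -rf "$src"
}

# Possible use inside a Slurm job script (paths are assumptions):
#   workdir=$(mktemp -d "/mnt/scratch/${USER}_XXXXXX")
#   ... run your program so that it writes into "$workdir" ...
#   stage_out "$workdir" "$HOME/YourProject001/results_${SLURM_JOB_ID}"
```

Because the working directory is removed only after a successful copy, a failed copy leaves the data on the local disk, although it may still be lost once the job ends.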

What to put on each file system?

That is up to you, but as a general guide:

Home directory (~2GB)

This should contain all of your hidden files and configuration files. Things like personal settings for editors and other programs can go here. The home directory is backed up.

Project directory (Shared with everyone on your project)

This area is not backed up but is faster and has more space. It should contain all of your input data, a copy of all your job scripts and final output data. It might be worthwhile to keep copies of any spreadsheets you might use to analyse results or any MATLAB or Mathematica scripts/programs here as well. Basically anything that would be hard to regenerate.

Generally each user in the project should create a subdirectory in the project folder for themselves.

Scratch directory (Shared with everyone on your project)

This area is not backed up. Generally all your intermediate data will go here. Anything that you can recreate by submitting a job script and waiting (even if the job runs for quite a long time) can go here. Anything that you can't regenerate automatically (things that you have to think about and create, rather than asking the computer to calculate) should go in the project directory because that is backed up.

Disk Quotas

MonARCH uses soft and hard quota limits.

A soft limit allows you to have more than your allocated space for a short period of "grace" time (in days). After the grace time has been exceeded, the filesystem will prevent further files being added until the excess is removed.

A hard limit prevents further files being added.

The quotas on the Project directories are much larger than the space users get in their own Home directories, so it is much better to use the Project space. Also the project space is available for all members of your project, so you can use it to share data with your colleagues.

Please use the mon_user_info command to view your usage and quota on all your file systems.

Quotas come in two forms:

- The size (GB) of the folder

- The number of inodes inside the folder. In simple terms, one file is one inode

You can exceed your quota on either count.
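The mon_user_info command remains the reference for your actual usage and quota. To find out which directory is consuming space or inodes, something like the following sketch may help. Both function names are hypothetical, and the inode figure is an approximation: it counts every path under the directory, so hard-linked files are counted more than once.

```shell
# Approximate the number of inodes used by a directory tree by counting
# every path beneath it (the directory itself is included in the count).
# count_inodes and dir_size_kb are hypothetical helper names.
count_inodes() {
    find "$1" | wc -l | tr -d '[:space:]'
}

# Report the disk space used by a directory tree, in kilobytes.
dir_size_kb() {
    du -sk "$1" | cut -f1
}

# Possible use (the directory name is an assumption):
#   count_inodes "$HOME/YourProject001"
#   dir_size_kb  "$HOME/YourProject001"
```

A directory holding very many small files can hit the inode limit long before it reaches the size limit, which is why both numbers are worth checking.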

If you need a higher allocation for project storage space, please send your request to mcc-help@monash.edu.

System Backups and File Recovery

The data storage on MonARCH is based on Lustre which distributes data across a number of disks and provides a mechanism to manage disk failures. This means that the MonARCH file system is fault tolerant and provides a high level of data protection.

In addition to this, the home directories are backed up to tape every night. This means that if you create a file on Tuesday, on the following day there will be a copy of the file stored in the backup system. Backups are kept for 30 days, before the system permanently removes the file to make space for new data.

| File System | Type | Quota | Policy | How long are backups kept? |
|---|---|---|---|---|
| Home Directory | NFS | Yes | Daily Backup | 30 days |
| Project Directory | Lustre | No | No | |
| Scratch Directory | Lustre | No | No | |

File Recovery Procedure

If you delete a file/directory by mistake, you will be able to recover the file by following this procedure:

- Email a request to mcc-help@monash.edu

- Please include the full path to the missing data, as well as information on when it was last seen and when it was deleted

- We will be able to restore files modified within the 30 day window. Beyond that time, any changes in the file will be lost.

The project scratch space is not backed up. Files are purged from this space as new space is required.

Storage outside of MonARCH

With your project, you have an allocation of storage on the MonARCH high-performance Lustre file system. This storage space is intended for data analyses and has a limited capacity. For large-scale, secure, and long-term research data storage, Monash University has the following offerings available through VicNode:

- Vault, primarily used as archive, is a tape-based system specifically for long-term storage; this is best used to free up space on your MonARCH project, allowing for more data to be staged into your project for analyses. For further information, please visit: https://vicnode.org.au/products-4/vault-tape-mon/

- Market is backed-up storage intended for active data sets and is accessible through the Windows, Linux, or Mac desktop environments at your research laboratory for convenient sharing of data files. For further information, please visit: https://vicnode.org.au/products-4/market-mon

We invite all MonARCH project leaders to kindly make a storage request to mcc-help@monash.edu, which we will forward to VicNode.

All additional storage requests can be lodged with the Research Storage team via the Data Dashboard or by contacting researchdata@monash.edu.

Instructions to access Ceph Market

The Market allocation is presented as a CIFS share with a given name, usually of the form: RDS-R-<Faculty>-<Name>. This share can be mounted within a Desktop session as follows:

- Open a Terminal window within your Desktop session and issue this command:

  gvfs-mount smb://storage.erc.monash.edu.au/shares/<sharename>

  - Replace the <sharename> with the one provided by your allocation;

  - enter your Monash ID (previously known as Authcate) username, when prompted;

  - enter MONASH when prompted to enter the "Domain"; and

  - finally, enter your Monash ID password on the "Password" prompt.

  Note: gvfs-mount is not available on MonARCH login nodes; use a desktop (Strudel) session to access the share.

- If successful, the mounted share will be visible through the file browser. If the user is not a member of the group, an "access denied" message will be displayed.

- It is best to cleanly unmount the share when it is no longer needed, by using this command:

  gvfs-mount -e smb://storage.erc.monash.edu.au/shares/<sharename>

  However, the share will be automatically unmounted once the desktop session terminates.

The collection owner should be able to add and remove collaborators who can mount the share through the eSolutions Group Management page. This page lists the shares for which you have admin privileges; each share appears as: RDS-R-<Faculty>-<Name>-Shared.

It is a known issue that the available storage for the share is incorrectly reported. Users are advised to simply ignore the warning, and allow a copy/move to proceed. We are unable to add non-Monash users to mount a share, since this authenticates against the Monash AD.

Instructions to access Vault

Coming soon.

In the meantime, please contact: mcc-help@monash.edu.

Extra Features Available

The following features are implemented using Slurm built-in technologies.

/tmp directory

While a Slurm job is running, its /tmp and /var/tmp directories are mapped to a unique portion of a local filesystem disk (e.g. /mnt/scratch). This is larger than the normal /tmp allocation that you might see if you had logged into the computer via ssh.

So if you ssh into a node with a running job, the /tmp file system you see in the shell will be different to the /tmp that the job sees.

Please contact the help desk if you need more info on this.

Be aware that this /tmp will be deleted after the Slurm job ends. It is unique to your Slurm JOBID. If you need any data files stored by your program in /tmp, please move them to your home or project directory before the job ends.
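One way to avoid forgetting this step is to register a shell trap in the job script so that the copy happens whenever the script exits. The following is a sketch only: `save_tmp_outputs` is a hypothetical helper name, and the file and destination paths in the usage comment are assumptions. A trap will not run if the job is killed outright (for example by SIGKILL), so it does not replace leaving enough time in the job for the copy.

```shell
# Copy the named files into a destination directory, skipping any that
# were never created. Returns non-zero if a copy fails.
# save_tmp_outputs is a hypothetical helper name, not a MonARCH tool.
save_tmp_outputs() {
    local dest="$1"
    shift
    local status=0
    local f
    mkdir -p "$dest" || return 1
    for f in "$@"; do
        if [ -e "$f" ]; then
            cp -a "$f" "$dest"/ || status=1
        fi
    done
    return "$status"
}

# Possible use near the top of a Slurm job script (paths are assumptions):
#   trap 'save_tmp_outputs "$HOME/YourProject001/job_${SLURM_JOB_ID}" /tmp/result.dat' EXIT
```

Registering the trap before the main program starts means the copy is attempted whether the program finishes successfully or fails partway through.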

Non-Volatile Memory Express (NVMe) Disks

The Slurm job will automatically use an NVMe drive as /tmp if there is one available, which means you may expect faster disk access on those nodes. Some of our mk machines contain NVMe drives.